Hello, I'm Keisuke Yamashita (keke) from the Verda Reliability Engineering team. In this post, I'd like to describe Verda's journey towards Infrastructure as Code and our vision for provisioning and managing resources (for example, virtual machines, load balancers, and Redis clusters) on Verda. This post also covers the tooling we had to prepare in order to use Terraform as a platform-wide tool for provisioning and managing resources.

What is Verda?

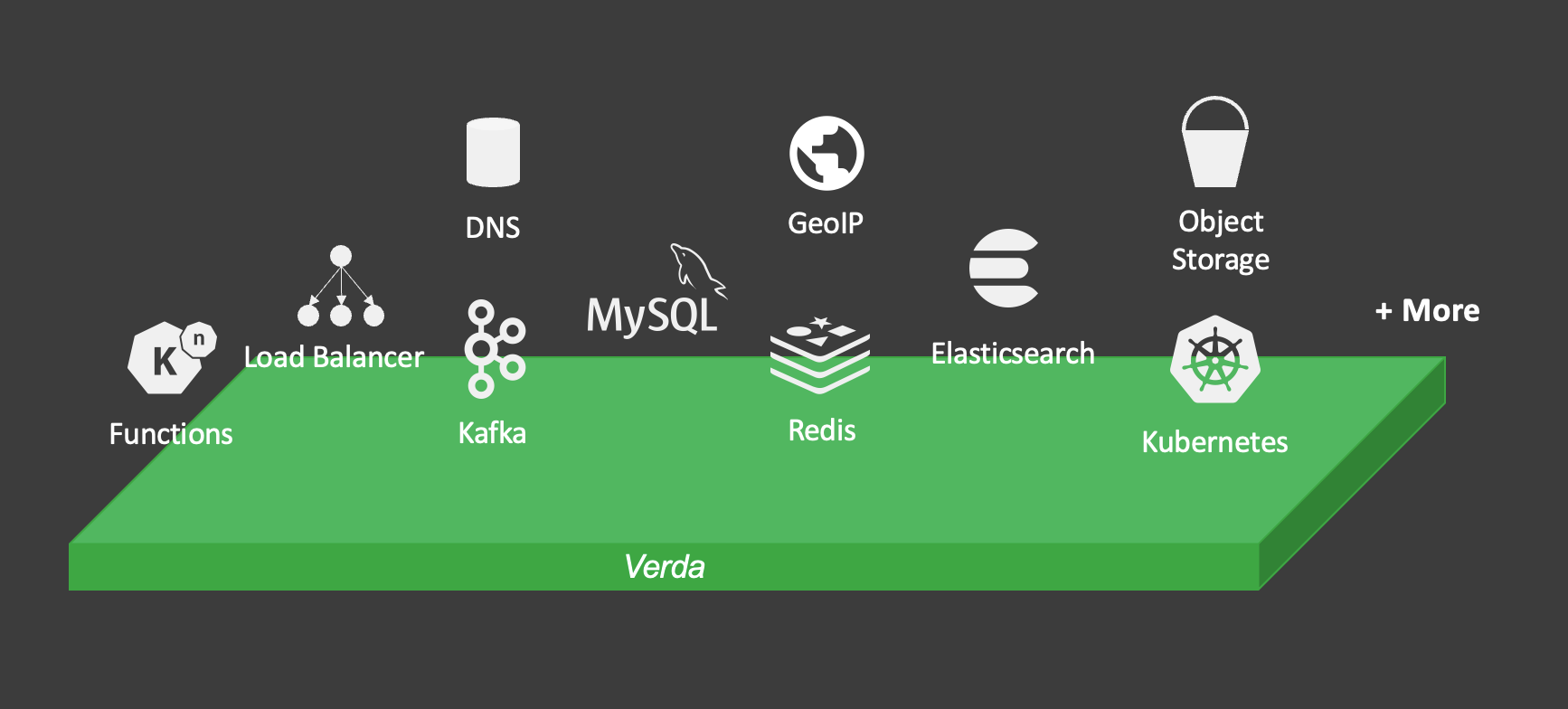

Verda is LINE's private cloud, built on OpenStack; the project began in 2016. Much like a public cloud such as Amazon Web Services (AWS) or Google Cloud Platform (GCP), it provides developers with the products they need for service development, such as virtual machines, MySQL, and CDN. A wide variety of products are supported, including Verda Kubernetes Service (VKS) for Kubernetes as a Service and Verda Functions Service (VFS) for Functions as a Service, so each service running on Verda can choose the products that fit its requirements. Developers decide which products to use and how to architect them.

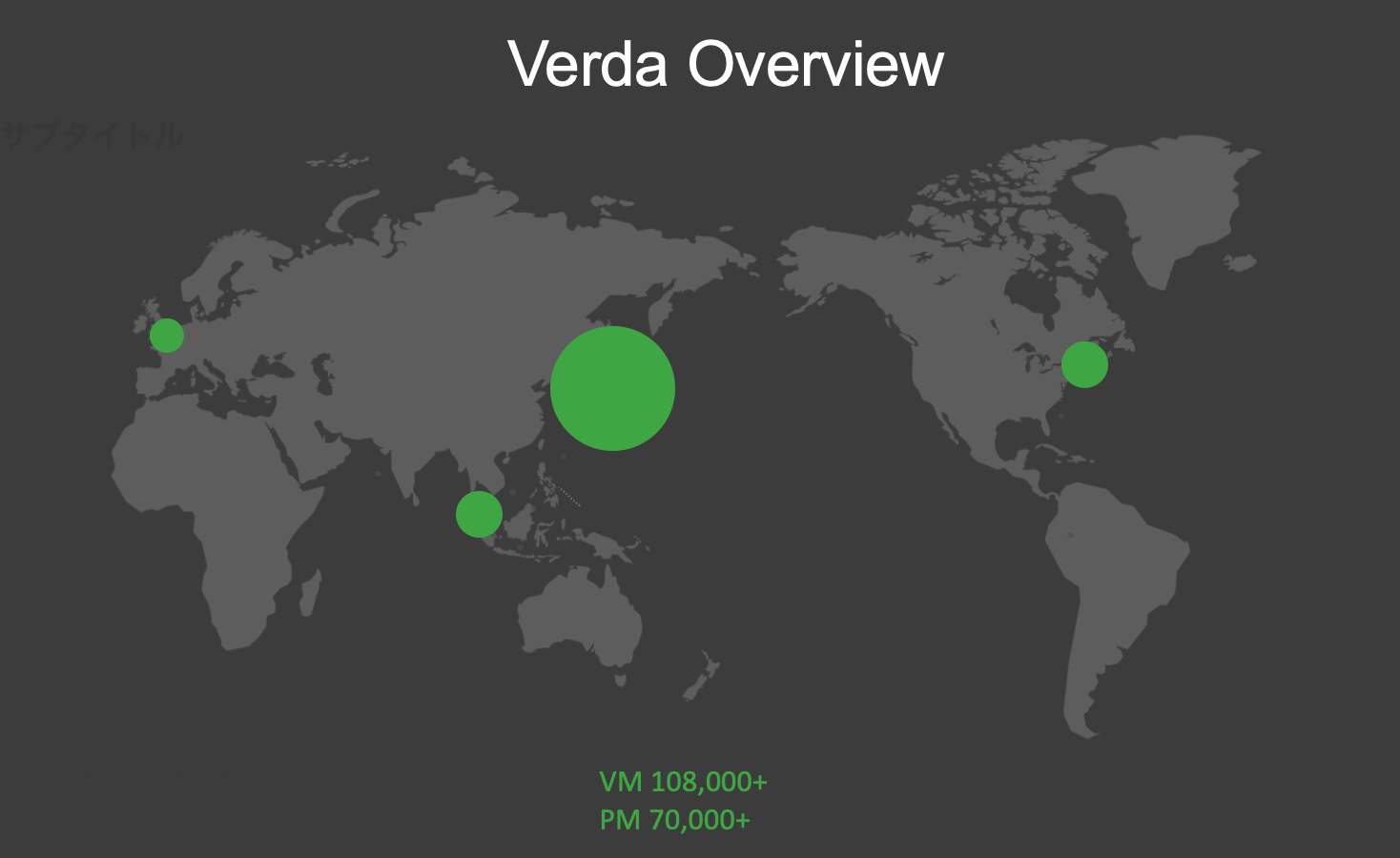

Not all LINE products are hosted on Verda, but many services are, and there are now over 100,000 virtual machines and 70,000 physical machines in use, with the numbers growing every day. Because LINE provides its services in many countries around the world, Verda operates in multiple regions as well.

Developers can create or update resources at any LINE location around the world, whenever and wherever they are, to develop LINE services. Verda lets developers focus on development: they do not have to purchase and set up virtual machines from scratch, go to a data center to maintain server racks, or perform basic monitoring, all of which are time-consuming and costly. Thanks to Verda, we can develop quickly while staying flexible and responsive in today's rapidly changing society.

How resources were provisioned and managed

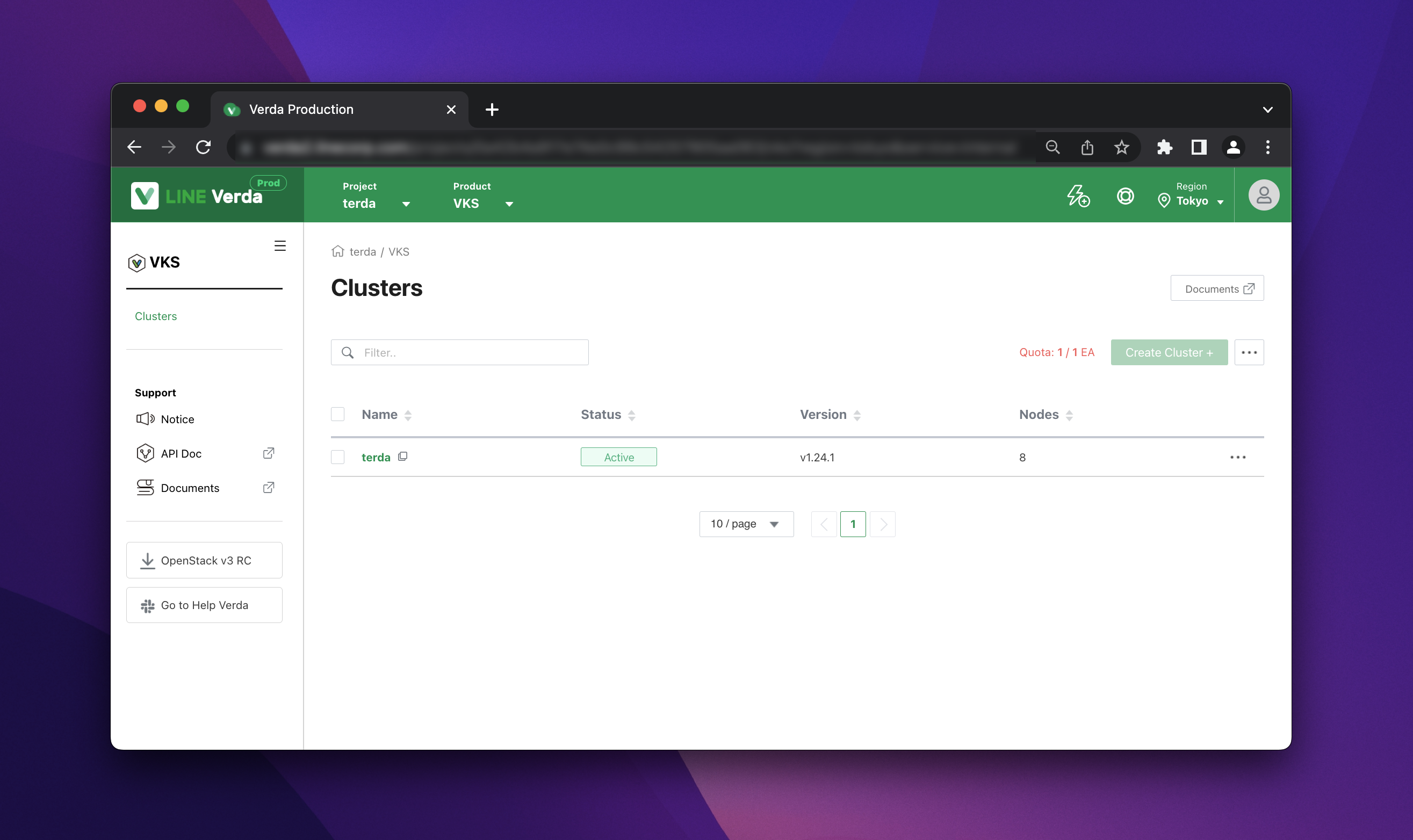

Relatively speaking, Verda is a fairly large private cloud. Until we started working on Infrastructure as Code, every resource that Verda developers created for their projects was created 100% manually through a web console called the Verda dashboard, shown in the screenshot.

In typical projects at LINE, developers create virtual machines or VKS clusters to host their applications, and Verda object storage (VOS) buckets and CDNs to distribute their static content. All of these resources used to be created manually. Every day, developers would log in to their projects in the Verda dashboard and perform various operations on each Verda product's page to manage the resources they needed for development. To be clear, as in a public cloud, Verda enforces authentication and authorization, and developers can operate within a Verda project only according to their permissions.

Problems and Infrastructure as Code

We have been building resources this way at Verda for more than six years, and for more than a decade if you include LINE resources outside Verda. Because we had worked this way for so long, many of our employees saw nothing wrong or missing in it.

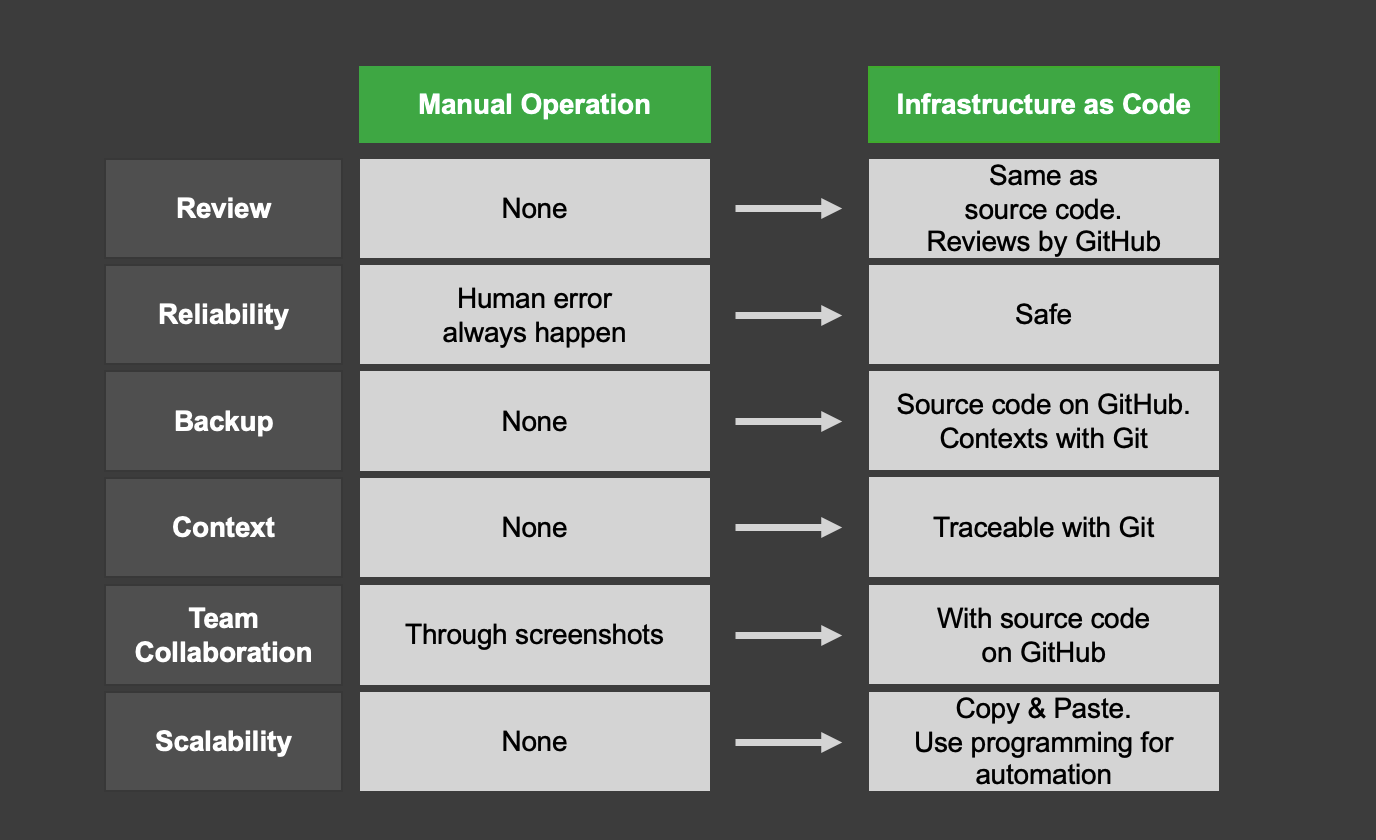

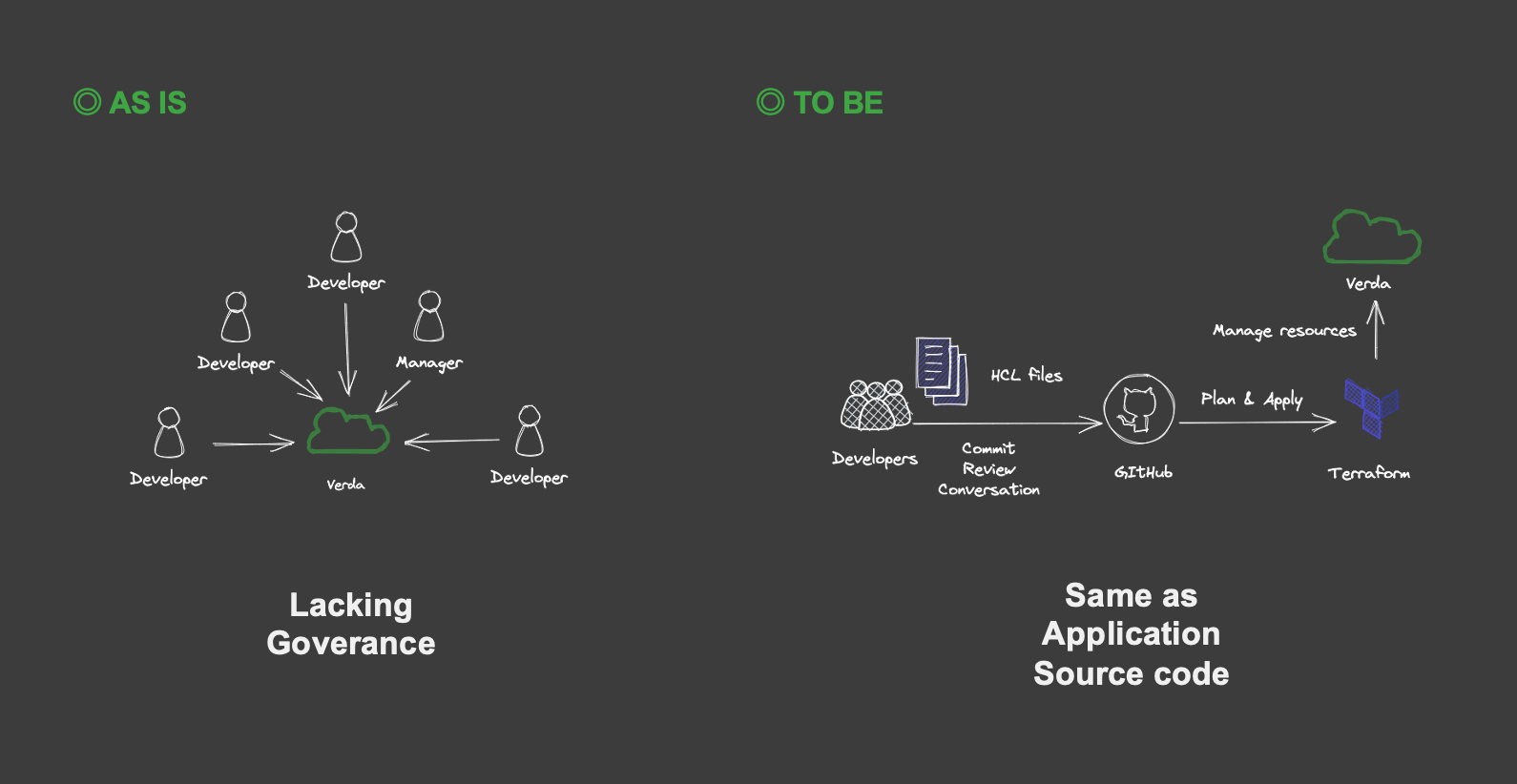

In the cloud-native era, the lifecycle of each resource has become shorter, resources are consumed more on demand, and settings change more frequently. As a result, how we manipulate infrastructure resources has become a very important factor in software development. The same is true at LINE: as a private cloud like Verda grows in scale, the number of operations on resources grows sharply.

In this context, provisioning and managing resources one by one with an interactive tool like the Verda dashboard poses problems and risks. When so many resources are created, modified, and deleted every day, clicking buttons on a dashboard is both inefficient and insecure. Operations on the dashboard may not have been reviewed, and there may be no metadata recording why, how, or for what a resource was created. Running a safe development cycle as a team is also difficult: multiple members may make conflicting changes, operations are not reproducible, and there is always a risk of human error.

Infrastructure as Code emerged to solve these problems of traditional resource provisioning and management. It enables developers to quickly spin up fully documented, versioned resources by running code. Because resource definitions live as source code in a version control system (VCS), changes can be reviewed on GitHub, and teams collaborate on source code that serves as the single source of truth.

The real question is how to achieve Infrastructure as Code, and we do it with Terraform, developed by HashiCorp. Terraform is a de facto standard and one of the most widely used Infrastructure as Code tools. Its vendor-neutral HashiCorp Configuration Language (HCL) allows declarative management of any resource, regardless of vendor. Through its plugin architecture, platform owners can extend Terraform to support their own platforms. We made Terraform compatible with Verda by developing a plugin (a custom provider) for Verda.
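To make "declarative" concrete, here is a toy Python model of the idea, not Terraform's actual implementation: the configuration states the desired resources, the state records what currently exists, and the tool derives the create, update, and delete actions by diffing the two. Resource addresses such as `verda_instance.web` are hypothetical, and real Terraform also handles replacements, dependency ordering, and refreshing state from the provider, all of which this sketch omits.

```python
from dataclasses import dataclass
from typing import Dict, List

# Attributes of one resource, e.g. {"flavor": "m1.small"}.
Attrs = Dict[str, str]


@dataclass(frozen=True)
class Action:
    kind: str     # "create", "update", or "delete"
    address: str  # resource address, e.g. "verda_instance.web" (hypothetical type)


def plan(desired: Dict[str, Attrs], current: Dict[str, Attrs]) -> List[Action]:
    """Diff the declared configuration against the recorded state.

    The user only declares the end result; the operations needed to
    get there are derived automatically.
    """
    actions: List[Action] = []
    for address in sorted(desired):
        if address not in current:
            actions.append(Action("create", address))
        elif desired[address] != current[address]:
            actions.append(Action("update", address))
    for address in sorted(current):
        if address not in desired:
            actions.append(Action("delete", address))
    return actions


if __name__ == "__main__":
    desired = {
        "verda_instance.web": {"flavor": "m1.large"},
        "verda_vos_bucket.assets": {"versioning": "true"},
    }
    current = {
        "verda_instance.web": {"flavor": "m1.small"},
        "verda_instance.old": {"flavor": "m1.small"},
    }
    for action in plan(desired, current):
        print(action.kind, action.address)
    # update verda_instance.web
    # create verda_vos_bucket.assets
    # delete verda_instance.old
```

Running the same plan twice against an unchanged state yields no actions, which is what makes declarative definitions safe to re-apply.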

How it started

We have made many efforts, such as demonstrations, hands-on sessions, and presentations, to spread the importance and value of Infrastructure as Code and its practices as part of this project. However, to use Terraform (or any other Infrastructure as Code tool) on a private cloud, we first had to prepare. On a public cloud you rarely need to think about these things because they are usually provided for you, but on a private cloud you need to design and implement them yourself.

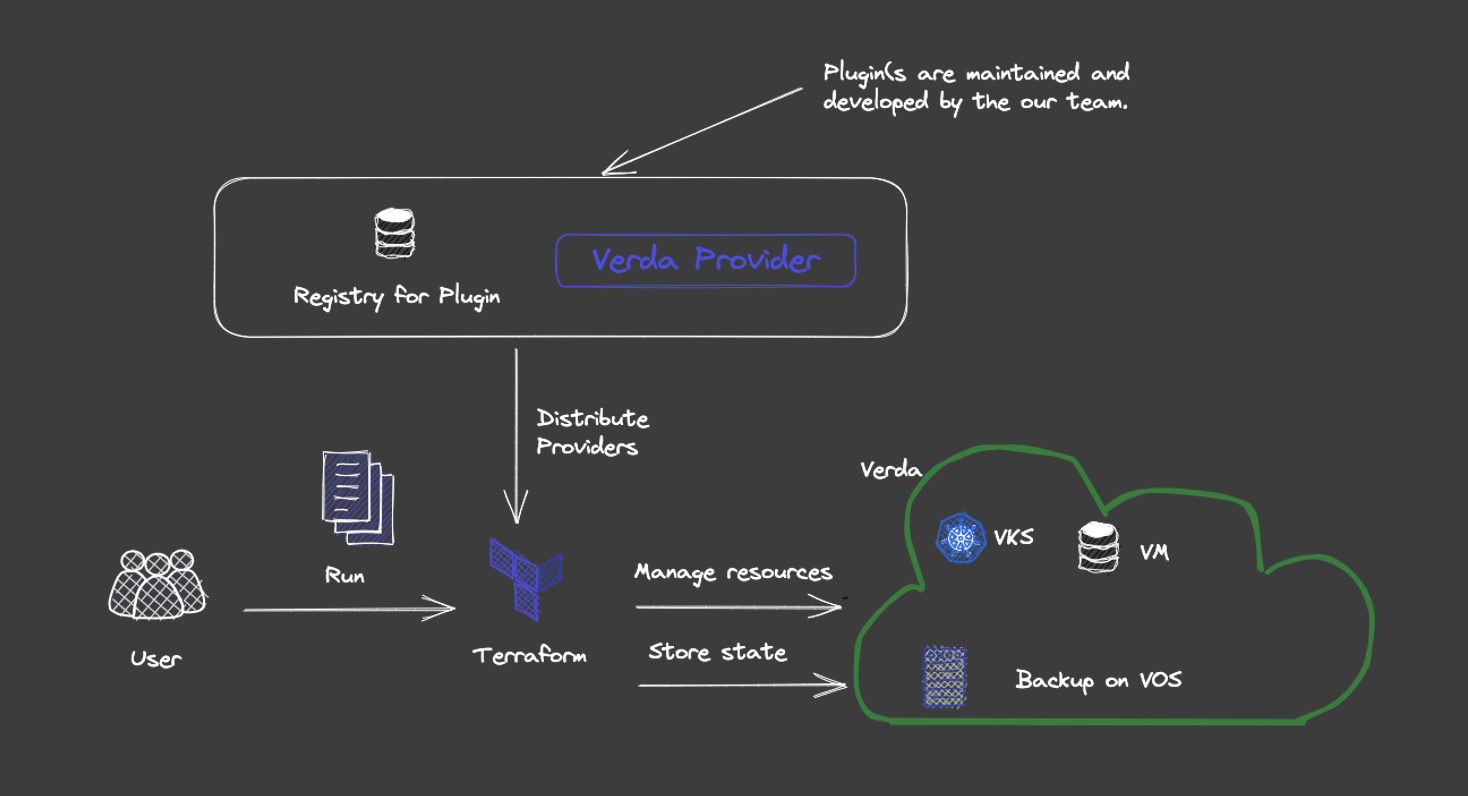

Private Terraform registry

Providers are generally published on HashiCorp's official Terraform Registry, where anyone can use them, and Terraform Cloud also offers registry functionality. However, if a platform is not exposed externally, you probably do not want to publish its provider either; the same applies even on a public cloud when you use Terraform against internal APIs. We therefore needed to host a registry ourselves, so we implemented a registry server that complies with Terraform's Module Registry Protocol and Provider Registry Protocol and hosted it on Verda's VKS cluster.
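As a rough illustration of what such a registry has to answer, the following sketch implements the provider side of the protocol with Python's standard library: the `/.well-known/terraform.json` service discovery document, the version listing, and the per-platform download metadata. The catalog contents, the `verda/verda` provider name, and the storage URLs are placeholders; module endpoints are left out, and a real registry must also serve a genuine GPG signing key and a signed SHA256SUMS file.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict, Optional, Tuple

# Hypothetical catalog: (namespace, type) -> version -> (os, arch) -> package metadata.
# The URLs stand in for objects kept in S3-compatible storage such as VOS.
BASE = "https://objects.example.internal/terraform-provider-verda/1.0.0"
CATALOG: Dict[Tuple[str, str], Dict[str, Dict[Tuple[str, str], Dict[str, str]]]] = {
    ("verda", "verda"): {
        "1.0.0": {
            ("linux", "amd64"): {
                "filename": "terraform-provider-verda_1.0.0_linux_amd64.zip",
                "download_url": f"{BASE}/terraform-provider-verda_1.0.0_linux_amd64.zip",
                "shasums_url": f"{BASE}/terraform-provider-verda_1.0.0_SHA256SUMS",
                "shasums_signature_url": f"{BASE}/terraform-provider-verda_1.0.0_SHA256SUMS.sig",
                "shasum": "0" * 64,  # placeholder SHA-256 of the zip
            },
        },
    },
}
PROTOCOLS = ["5.0"]  # plugin protocol versions the provider speaks
SIGNING_KEYS = {"gpg_public_keys": [{"key_id": "PLACEHOLDER", "ascii_armor": "<ASCII-armored public key>"}]}


def route(path: str) -> Tuple[int, Optional[Dict[str, Any]]]:
    """Map a request path to (status, JSON body) following the provider protocol."""
    if path == "/.well-known/terraform.json":
        # Service discovery: tells Terraform where the provider API lives.
        return 200, {"providers.v1": "/v1/providers/"}
    parts = path.strip("/").split("/")
    if len(parts) >= 4 and parts[:2] == ["v1", "providers"]:
        versions = CATALOG.get((parts[2], parts[3]))
        if versions is None:
            return 404, None
        if len(parts) == 5 and parts[4] == "versions":
            # GET /v1/providers/:namespace/:type/versions
            return 200, {"versions": [
                {"version": version,
                 "protocols": PROTOCOLS,
                 "platforms": [{"os": os_, "arch": arch} for (os_, arch) in packages]}
                for version, packages in versions.items()
            ]}
        if len(parts) == 8 and parts[5] == "download":
            # GET /v1/providers/:namespace/:type/:version/download/:os/:arch
            package = versions.get(parts[4], {}).get((parts[6], parts[7]))
            if package is not None:
                return 200, {"protocols": PROTOCOLS, "os": parts[6], "arch": parts[7],
                             **package, "signing_keys": SIGNING_KEYS}
    return 404, None


class RegistryHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = route(self.path.split("?", 1)[0])
        payload = json.dumps(body if body is not None else {"errors": ["not found"]}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


if __name__ == "__main__":
    # Terraform reaches registries only over HTTPS, so put TLS termination
    # (for example, an ingress on the Kubernetes cluster) in front of this.
    HTTPServer(("0.0.0.0", 8080), RegistryHandler).serve_forever()
```

Keeping the routing in a pure `route` function separates the protocol logic from the HTTP server, which makes it easy to test and to swap the in-memory catalog for object storage listings.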

The registry uses VOS, which is compatible with Amazon Simple Storage Service (S3), as its storage backend, and provider binaries and other data are stored there. When Verda developers run Terraform, Terraform queries our registry for the provider binary, downloads the provider, and then uses it to communicate with Verda. Apart from specifying the registry URL, developers need to do nothing else to practice Infrastructure as Code on Verda.
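On the Terraform side, the registry is selected through the hostname part of a provider's source address in `required_providers`. The sketch below, using a hypothetical hostname `registry.verda.example`, models how such an address maps onto the discovery and version-listing URLs that Terraform requests; it is a simplified model that ignores legacy single-part addresses.

```python
from dataclasses import dataclass
from urllib.parse import urljoin

DEFAULT_HOSTNAME = "registry.terraform.io"  # used when the source omits a hostname


@dataclass(frozen=True)
class ProviderAddress:
    hostname: str
    namespace: str
    provider_type: str


def parse_source(source: str) -> ProviderAddress:
    """Parse a provider source address of the form [HOSTNAME/]NAMESPACE/TYPE."""
    # Provider addresses are case-insensitive; normalize to lowercase.
    parts = source.lower().split("/")
    if len(parts) == 2:
        return ProviderAddress(DEFAULT_HOSTNAME, parts[0], parts[1])
    if len(parts) == 3:
        return ProviderAddress(parts[0], parts[1], parts[2])
    raise ValueError(f"unsupported provider source address: {source!r}")


def discovery_url(addr: ProviderAddress) -> str:
    # The service discovery document is always fetched over HTTPS.
    return f"https://{addr.hostname}/.well-known/terraform.json"


def versions_url(addr: ProviderAddress, providers_v1: str) -> str:
    """Build the version-listing URL from the discovery document's "providers.v1" value."""
    # Relative values are resolved against the discovery document's URL.
    base = urljoin(discovery_url(addr), providers_v1)
    return f"{base}{addr.namespace}/{addr.provider_type}/versions"


if __name__ == "__main__":
    addr = parse_source("registry.verda.example/verda/verda")
    print(discovery_url(addr))
    print(versions_url(addr, "/v1/providers/"))
```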

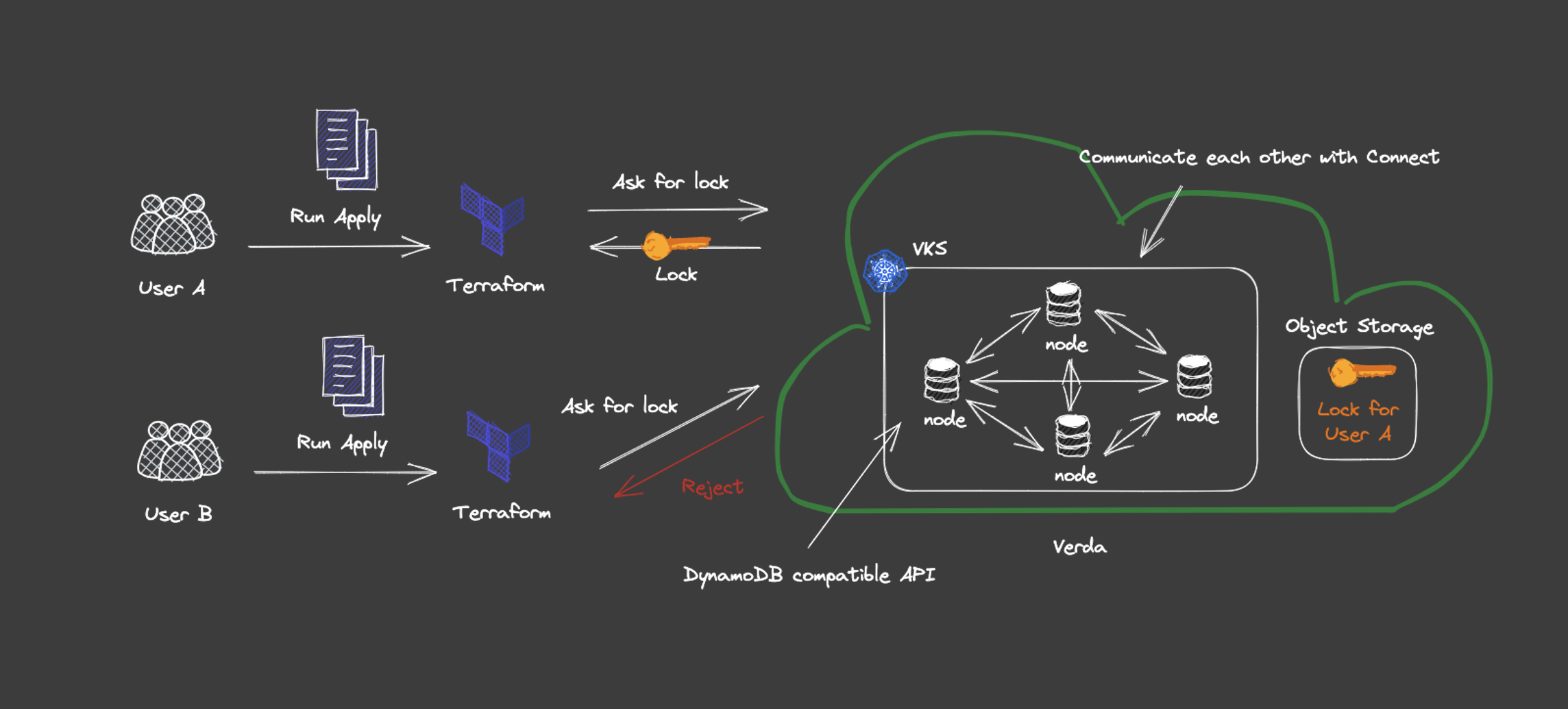

Offering Terraform locks for safer, consistent operations

By default, when Terraform performs an operation that could write state, it locks the file representing the current state, called the Terraform state. How locking works depends on the backend: the GCS backend supports locking on its own, while the S3 backend achieves locking with Amazon DynamoDB. On a private cloud these options are not available, so other backends such as the Kubernetes or HTTP backend must be used. Hosting one for every development team would be a burden, however, so locking has to be provided as part of the platform.
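The S3 backend's DynamoDB locking boils down to conditional writes: Terraform puts an item keyed by `LockID` only if no item with that key exists and, as we understand it, deletes it only if the stored lock metadata still matches. The in-memory model below sketches those semantics, which is the contract a DynamoDB-compatible lock service has to honor. The class and function names and the exact metadata fields are illustrative assumptions, not Terraform's code.

```python
import json
import threading
import uuid
from typing import Dict


class ConditionalCheckFailed(Exception):
    """Stands in for DynamoDB's ConditionalCheckFailedException."""


class LockTable:
    """In-memory model of a lock table whose partition key is LockID."""

    def __init__(self) -> None:
        self._items: Dict[str, str] = {}  # LockID -> Info (JSON lock metadata)
        self._mu = threading.Lock()       # makes each conditional write atomic

    def put_if_absent(self, lock_id: str, info: str) -> None:
        # Models PutItem with ConditionExpression "attribute_not_exists(LockID)".
        with self._mu:
            if lock_id in self._items:
                raise ConditionalCheckFailed(self._items[lock_id])
            self._items[lock_id] = info

    def delete_if_info_matches(self, lock_id: str, info: str) -> None:
        # Models a DeleteItem conditioned on the stored Info, so a client
        # cannot release a lock that someone else holds.
        with self._mu:
            if self._items.get(lock_id) != info:
                raise ConditionalCheckFailed(self._items.get(lock_id, ""))
            del self._items[lock_id]


def lock_state(table: LockTable, bucket: str, key: str, who: str) -> str:
    """Acquire the lock for one state file and return the Info needed to unlock it."""
    info = json.dumps({"ID": str(uuid.uuid4()), "Operation": "OperationTypeApply", "Who": who})
    table.put_if_absent(f"{bucket}/{key}", info)  # LockID modeled as "<bucket>/<key>"
    return info


def unlock_state(table: LockTable, bucket: str, key: str, info: str) -> None:
    table.delete_if_info_matches(f"{bucket}/{key}", info)
```

A failed acquisition surfaces the current holder's metadata, which is what lets Terraform tell the user who holds the lock.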

Fortunately, Verda has VOS, an S3-compatible object storage that supports versioning, so we decided to recommend the S3 backend and provide a DynamoDB-compatible locking mechanism. Open source DynamoDB-compatible databases such as ScyllaDB already existed, but unless we used a commercial edition, they did not meet even our minimum requirements for authentication and authorization. Because our team is still small, we explored whether we could provide locking without operating a database. We designed and implemented a locking mechanism with reference to MinIO's dsync and the gossip protocol used in HashiCorp Serf. The service exposes a DynamoDB-compatible API and runs as a cluster, which makes the locking mechanism scalable and highly available. The design details are a long story, so we will present them another time.
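dsync's core idea is majority-quorum locking: a client asks every node for the lock and holds it only if more than half grant it, releasing any partial grants otherwise, so the cluster keeps working as long as a majority of nodes is reachable. The single-process sketch below shows that idea only and is not our implementation: requests are sequential rather than concurrent, the node list is static instead of being maintained by a gossip protocol such as Serf's, and stale-lock expiry is omitted.

```python
from typing import Dict, List


class Node:
    """One lock server that keeps a local map of resource -> owner."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.up = True  # simulates reachability
        self.owners: Dict[str, str] = {}

    def grant(self, resource: str, owner: str) -> bool:
        if not self.up or resource in self.owners:
            return False
        self.owners[resource] = owner
        return True

    def release(self, resource: str, owner: str) -> None:
        # Only the current owner may release its entry.
        if self.up and self.owners.get(resource) == owner:
            del self.owners[resource]


def quorum(n: int) -> int:
    """Smallest strict majority of n nodes."""
    return n // 2 + 1


def acquire(nodes: List[Node], resource: str, owner: str) -> bool:
    granted = [node for node in nodes if node.grant(resource, owner)]
    if len(granted) >= quorum(len(nodes)):
        return True
    # Roll back partial grants so a failed attempt leaves no stray entries.
    for node in granted:
        node.release(resource, owner)
    return False


def release(nodes: List[Node], resource: str, owner: str) -> None:
    for node in nodes:
        node.release(resource, owner)
```

Because any two majorities of the same node set overlap in at least one node, two clients can never both hold the same lock, while up to a minority of nodes may be down.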

The nodes that make up the cluster communicate with each other using Connect (gRPC-compatible HTTP APIs from Buf), which keeps the overhead of inter-node traffic, a typical bottleneck, low. This is a bit off topic, but Connect works over HTTP/1.1 and can be called much like a RESTful API, which makes it very easy to develop with.
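To show why Connect feels like a RESTful API, the sketch below builds a Connect unary call as an ordinary HTTP POST with a JSON body, using only Python's standard library. The service name `lock.v1.LockService`, its `Acquire` method, the host name, and the request fields are all hypothetical.

```python
import json
import urllib.request


def connect_unary_request(base_url: str, service: str, method: str, message: dict) -> urllib.request.Request:
    """Build a Connect unary call: POST /<package.Service>/<Method> with a JSON body."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{service}/{method}",
        data=json.dumps(message).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",  # JSON codec instead of binary protobuf
            "Connect-Protocol-Version": "1",
        },
    )


if __name__ == "__main__":
    req = connect_unary_request(
        "http://lock-node-0:8080",  # hypothetical peer address
        "lock.v1.LockService",      # hypothetical service
        "Acquire",
        {"lock_id": "tfstate/prod/terraform.tfstate"},
    )
    print(req.get_method(), req.full_url)
```

Sending it is an ordinary `urllib.request.urlopen(req)` call, and a successful unary response is HTTP 200 with a JSON body, so everyday tools like curl also work for debugging.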

The system is hosted on VKS and offered to developers as a multi-tenant service.

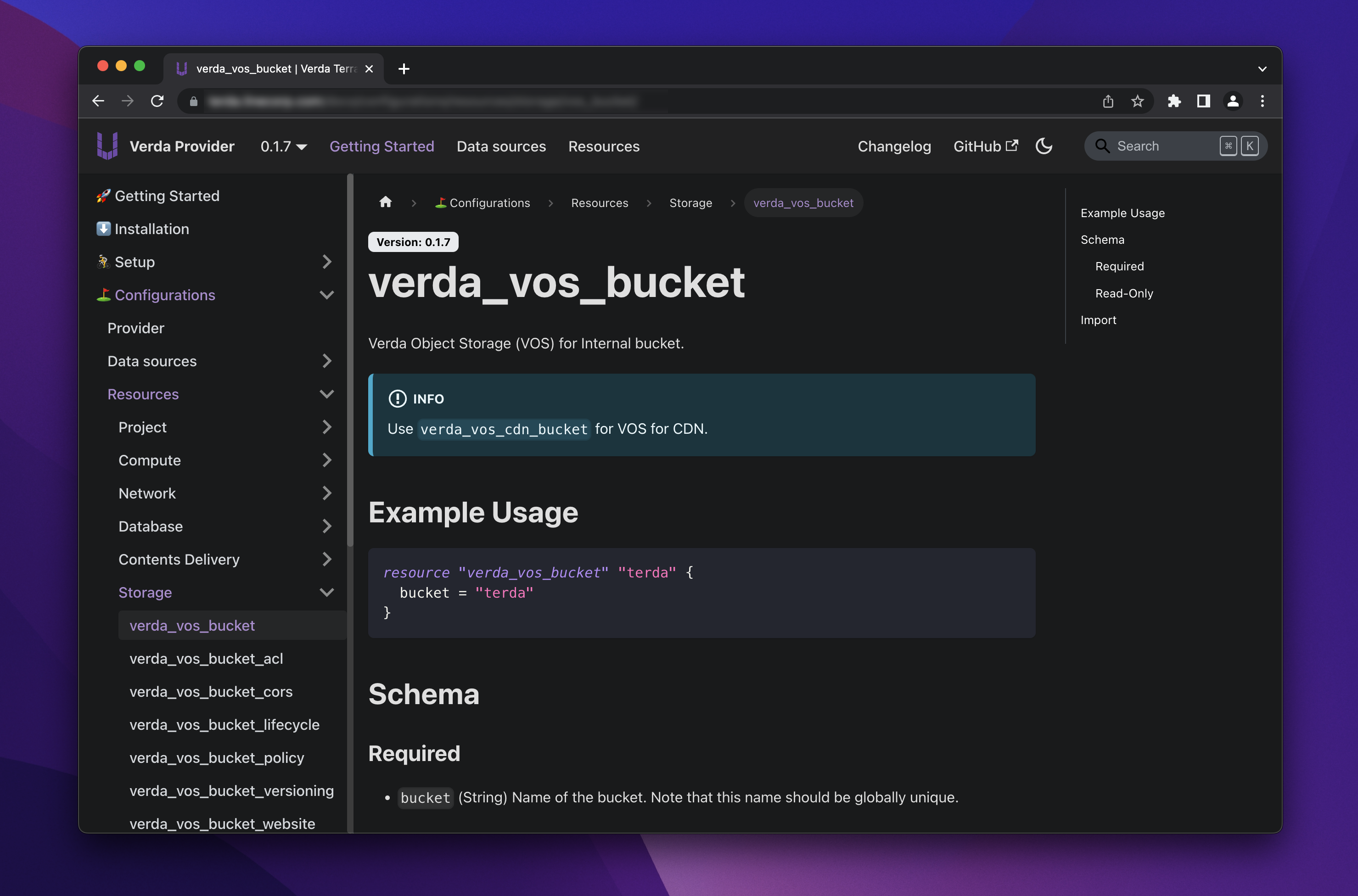

Provider and documentation portal

Next, we had to implement the custom provider itself. Each team at Verda develops and operates its own products, so development is decentralized: each service owns its API endpoints, resulting in a microservice-like architecture. To call each API correctly, the provider uses the OpenStack service catalog for service discovery, looking up each product's endpoint before calling its API. This lets the provider retrieve the region-specific endpoints of each of our many products and make API calls on the developer's resources. Also, because we use a private registry, we cannot rely on the documentation pages that HashiCorp's public registry provides.
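As a minimal sketch of catalog-based discovery, assuming a Keystone v3 token response (whose `catalog` lists services by `type`, each with per-region, per-interface endpoints), the helper below picks the URL for a given product and region. The service type `verda-kubernetes`, the region names, and the URLs are hypothetical.

```python
from typing import Any, Dict, List

# Shape follows the "catalog" field of a Keystone v3 token response;
# the service type "verda-kubernetes", regions, and URLs are hypothetical.
SAMPLE_CATALOG: List[Dict[str, Any]] = [
    {"type": "compute", "name": "nova", "endpoints": [
        {"interface": "public", "region_id": "region-a", "url": "https://compute.region-a.example/v2.1"},
        {"interface": "internal", "region_id": "region-a", "url": "http://compute.internal.region-a.example/v2.1"},
        {"interface": "public", "region_id": "region-b", "url": "https://compute.region-b.example/v2.1"},
    ]},
    {"type": "verda-kubernetes", "name": "vks", "endpoints": [
        {"interface": "public", "region_id": "region-a", "url": "https://vks.region-a.example/v1"},
    ]},
]


class EndpointNotFound(LookupError):
    """Raised when no endpoint matches the requested service, region, and interface."""


def find_endpoint(catalog: List[Dict[str, Any]], service_type: str, region: str,
                  interface: str = "public") -> str:
    for service in catalog:
        if service.get("type") != service_type:
            continue
        for endpoint in service.get("endpoints", []):
            # Keystone v3 endpoints carry "region_id" (and a deprecated "region"); accept either.
            endpoint_region = endpoint.get("region_id") or endpoint.get("region")
            if endpoint.get("interface") == interface and endpoint_region == region:
                return endpoint["url"]
    raise EndpointNotFound(f"no {interface} endpoint for {service_type!r} in {region!r}")


if __name__ == "__main__":
    print(find_endpoint(SAMPLE_CATALOG, "verda-kubernetes", "region-a"))
```

Resolving endpoints at runtime like this means a product team can move or add a regional endpoint by updating the catalog, without releasing a new provider.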

After generating Markdown with terraform-plugin-docs (tfplugindocs), we apply some minor processing and deploy the result to our Docusaurus-based documentation site. This gives us a clean, easy-to-read documentation site that supports developer onboarding.

Since the value of Infrastructure as Code is not yet widely recognized at LINE, this portal also offers all kinds of support, including guides and references.

How it changed our platform (so far)

After we officially launched this project, SREs and others who wanted safer, code-based resource management quickly jumped on it. We feel that the true value of team collaboration is now more apparent, because all operations, such as provisioning Verda resources and changing settings, now go through GitHub and are reviewable. Above all, I feel that developers' attitudes toward Verda resources have changed. As mentioned earlier, LINE had managed all resources imperatively with interactive tools. Everyone had grown used to it, so no one questioned the problems with that approach. However, many SaaS products and public clouds no longer recommend such methods, and Infrastructure as Code has become widely accepted. For example, at my previous job we exchanged Terraform files when communicating with GCP customer support.

As an SRE, a platform provider, and a member of the organization, I believe this effort was a major step toward taking Verda to the next stage.

What's next

Infrastructure as Code was introduced only recently, and LINE has been creating resources manually for as long as it has existed. To introduce this new concept and tooling smoothly at LINE, we plan to take the following actions.

Cultivate the culture of Infrastructure as Code

For Infrastructure as Code to deliver its full value, it needs to take root as a culture. Terraform has many use cases: its plugin mechanism makes it easy to extend, so it can manage a wide variety of resources, and there are already over 1,600 public providers on HashiCorp's Terraform Registry.

Looking at public clouds today, I see many organizations following Infrastructure as Code principles even though a web console is available, and the practice clearly has strong support. The value is there; whether it permeates the organization depends on how well we communicate that value to people and build it into our culture.

We will actively support onboarding in every form: continuous improvement based on feedback from pilot teams and other early adopters, better documentation, and hands-on support.

Support developer migration

As mentioned earlier, LINE has a long history. Managing only new resources with Infrastructure as Code is just part of the story, because developers would keep updating and maintaining existing resources manually. Terraform has an import feature, but migration is challenging when a vast number of resources already exist and the value of Infrastructure as Code is not yet widely recognized in the organization.

We want to work on this because we believe it's essential to provide developers with tools, like dtan4/terraforming and GoogleCloudPlatform/terraformer, that can migrate existing resources in a single step.
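terraforming and terraformer each generate configuration and state from existing cloud resources. A different route, available in Terraform 1.5 and later, is the `import` block, which `terraform plan -generate-config-out=<file>` can pair with configuration generation. As a hedged sketch, assuming a hypothetical inventory of existing Verda resources and hypothetical resource types such as `verda_instance`, the following turns that inventory into `import` blocks in one step.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass(frozen=True)
class ExistingResource:
    resource_type: str  # Terraform resource type, e.g. "verda_instance" (hypothetical)
    remote_id: str      # ID the provider's import logic expects; assumed to contain no quotes
    name: str           # human-readable name on the platform


def to_identifier(name: str) -> str:
    """Turn a platform name into a conservative Terraform identifier."""
    # Keep letters, digits, and underscores; identifiers must not start with a digit.
    ident = re.sub(r"[^A-Za-z0-9_]", "_", name)
    if not ident or ident[0].isdigit():
        ident = "r_" + ident
    return ident


def import_blocks(resources: Iterable[ExistingResource]) -> str:
    """Render one HCL import block per existing resource."""
    seen: Set[Tuple[str, str]] = set()
    blocks: List[str] = []
    for res in resources:
        base = to_identifier(res.name)
        ident, n = base, 2
        # Disambiguate names that collapse to the same identifier.
        while (res.resource_type, ident) in seen:
            ident, n = f"{base}_{n}", n + 1
        seen.add((res.resource_type, ident))
        blocks.append(
            "import {\n"
            f"  to = {res.resource_type}.{ident}\n"
            f'  id = "{res.remote_id}"\n'
            "}\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    print(import_blocks([
        ExistingResource("verda_instance", "a1b2", "web-01"),
        ExistingResource("verda_vos_bucket", "assets", "static-assets"),
    ]))
```

Writing the output to a `.tf` file and running `terraform plan -generate-config-out=generated.tf` would then ask the provider to draft matching resource configuration, assuming the provider implements import for those types.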

I think we can adopt Infrastructure as Code company-wide only once we can migrate existing resources into Terraform.

Zero Touch Prod

Zero Touch Prod, advocated by Google's SRE and security teams, is a goal grounded in the principle of least privilege. All humans (including SREs) are stripped of both permanent and temporary permissions, and resources are changed only indirectly, through automation and tools, to avoid risk. Because Infrastructure as Code manages resources as source code, CI can change resources in the same way it handles application code.

Because Terraform can safely perform operations on behalf of humans, developers no longer need direct access to resources and can make changes through GitHub instead. We believe this lets us aim for a more secure and reliable platform.