This is Oklahomer from LINE Corp. In this post, I'd like to explain the architecture behind the chat feature of LINE LIVE, a video streaming service.

Introduction

LINE LIVE for iOS and Android has a chat feature that lets its users send comments in real-time while they are watching a live-streaming video. This not only lets users (or viewers in this case) communicate with each other, but it also lets the streamers connect with their viewers. Streamers can chat with their viewers back and forth, and sometimes plan their videos according to what the viewers say in the chat. This is why the chat is an integral part of the streaming experience.

As you can probably imagine, celebrity live streams attract a large number of viewers, and along with them a torrent of comments. Comments sent to the stream must be broadcast simultaneously to every other viewer, so distributing the load effectively has always been one of our top-priority tasks. A single stream sometimes receives 10,000 comments per minute.

We took the possibility of large volumes of comments into consideration when we were developing LINE LIVE, and we presently have over 100 server instances in operation for the chat feature.

More on the server architecture below.

Overall server architecture

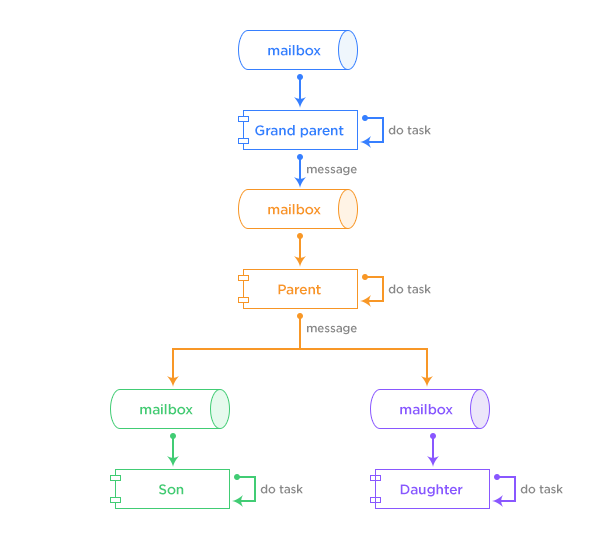

Below is the overall architecture of the server.

Let's take a look at the "chat room," one of the most important aspects when implementing the chat feature. Note how a comment written by Client 1 while connected to Chat Server 1 is sent to Client 2 connected to Chat Server 2.

As mentioned above, a popular stream will have a very large number of comments. A barrage of comments too overwhelming to even read is an important barometer of a stream's popularity. However, too many comments can put a heavy load on both the server that distributes them and the client that displays them. The solution we chose was to segment the users into separate "chat rooms" so that users can only chat with other users in the same chat room. As the chat rooms are distributed among multiple servers, the users' connections are also distributed among multiple servers even if they are in the same chat room.
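As a rough illustration of the segmentation idea, the sketch below places each arriving viewer in the first chat room that still has space and opens a new room when all are full. The class name `RoomAssigner` and the notion of a fixed per-room capacity are assumptions made for this example; the actual rule LINE LIVE uses to split rooms is not described here.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: splits the viewers of one stream into capped chat rooms.
class RoomAssigner {
    private final int capacity;                                 // assumed per-room limit
    private final List<Integer> roomSizes = new ArrayList<>();  // index = room number

    RoomAssigner(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException("capacity must be positive");
        this.capacity = capacity;
    }

    // Returns the number of the room the new viewer joins.
    synchronized int join() {
        for (int room = 0; room < roomSizes.size(); room++) {
            if (roomSizes.get(room) < capacity) {
                roomSizes.set(room, roomSizes.get(room) + 1);
                return room;
            }
        }
        roomSizes.add(1);              // every room is full: open a new one
        return roomSizes.size() - 1;
    }

    // Frees a slot when a viewer leaves the given room.
    synchronized void leave(int room) {
        int size = roomSizes.get(room);
        if (size > 0) roomSizes.set(room, size - 1);
    }

    synchronized int roomCount() { return roomSizes.size(); }
}
```

With a capacity of 2, the first two calls to `join()` return room 0 and the third returns room 1.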

To achieve this, the chat server architecture has the following three characteristics.

- WebSocket: Communication between the client and server

- High-speed parallel processing using Akka toolkit

- Comment synchronization between servers by using Redis

In the next few sections, let's go over these characteristics in more detail.

WebSocket

WebSocket makes low-latency two-way communication possible over a single connection. By using WebSocket, the server can broadcast the massive tide of comments to the users in real time. In addition, a separate HTTP request is no longer required every time a user sends a comment, which uses resources more efficiently.

When messages are transmitted over a single connection, both the servers and the clients need to know the payload format to handle payloads properly. This is because they cannot separate the response format by endpoint as they normally would with a Web API. The live chat implementation uses a JSON payload format, and we've added one common field to it that indicates what each payload represents, so that every payload can be mapped to the corresponding class. This approach lets us easily define a new payload type, such as a payload for implementing a pre-paid gift.
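The mapping from a common field to a class could look roughly like the sketch below. The field name `type`, the class names, and the regex-based extraction are all assumptions made to keep the example free of dependencies; a real implementation would parse the payload with a JSON library.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical payload classes; the real field and class names are not given in this post.
interface Payload {}

class CommentPayload implements Payload {
    final String rawJson;
    CommentPayload(String rawJson) { this.rawJson = rawJson; }
}

class GiftPayload implements Payload {
    final String rawJson;
    GiftPayload(String rawJson) { this.rawJson = rawJson; }
}

class PayloadRegistry {
    // Naive extraction of the assumed common "type" field; use a JSON library in practice.
    private static final Pattern TYPE = Pattern.compile("\"type\"\\s*:\\s*\"([^\"]*)\"");
    private final Map<String, Function<String, Payload>> factories = new HashMap<>();

    // Supporting a new payload type only requires one more registration.
    void register(String type, Function<String, Payload> factory) {
        factories.put(type, factory);
    }

    Payload decode(String json) {
        Matcher m = TYPE.matcher(json);
        if (!m.find()) throw new IllegalArgumentException("payload has no type field");
        Function<String, Payload> factory = factories.get(m.group(1));
        if (factory == null) throw new IllegalArgumentException("unknown type: " + m.group(1));
        return factory.apply(json);
    }
}
```

After `register("comment", CommentPayload::new)`, decoding `{"type":"comment","text":"hi"}` yields a `CommentPayload`, and adding a gift payload is a matter of registering one more factory.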

Connections sometimes drop and recover, especially when a user watches a particularly long live stream on a mobile device. To cope with such connection problems, we keep watch on the payload transmissions and have the client disconnect and reconnect when the network seems unstable.
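One simple way to "keep watch on the payload transmissions" is a silence timeout: if nothing has arrived for a while, treat the connection as stale and reconnect. The sketch below shows that idea only. The class name, the timeout-based rule, and the caller-supplied timestamps are assumptions for illustration, not a description of the actual client.

```java
// Hypothetical watchdog: flags a connection as stale when no payload has arrived recently.
class ConnectionWatchdog {
    private final long timeoutMillis;   // assumed threshold; no real value is given in this post
    private long lastPayloadAt;

    ConnectionWatchdog(long timeoutMillis, long nowMillis) {
        this.timeoutMillis = timeoutMillis;
        this.lastPayloadAt = nowMillis;
    }

    // Call whenever any payload is received on the connection.
    synchronized void onPayload(long nowMillis) { lastPayloadAt = nowMillis; }

    // Call periodically; true means "close the socket and reconnect".
    synchronized boolean shouldReconnect(long nowMillis) {
        return nowMillis - lastPayloadAt > timeoutMillis;
    }

    // Call after a successful reconnect to restart the silence timer.
    synchronized void onReconnected(long nowMillis) { lastPayloadAt = nowMillis; }
}
```

Passing the current time in as a parameter keeps the class easy to test; in an application you would feed it `System.currentTimeMillis()` from a periodic timer.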

Akka toolkit

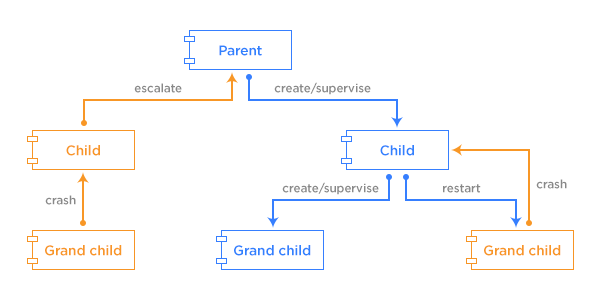

One of the most important aspects of the Akka actor system is the structure between the actors and supervisors. Before talking about the architecture of the chat server, let me first explain the underlying characteristics of the actor system. I will not go over the basics of the actor model here.

Actor

Every actor is a container for state and behavior and is assigned a mailbox that queues its incoming messages. As the actor's state is hidden and shielded from the outside, all interactions between actors rely on message passing. Actors execute the actions defined by their behavior in response to the messages they receive, and send out subsequent messages to other actors.

All message passing is asynchronous. In other words, once an actor has sent a message, it can move on to the next message in its mailbox right away. The key to the actor system is splitting tasks into smaller units for efficient parallel processing, so that actors work through small pieces of a task by passing fine-grained messages to each other. However, a design flaw that triggers blocking behavior in the actors can leave you with messages piling up and mailboxes overflowing. You therefore need to take extra care not to call a blocking API of a 3rd party library by mistake. In the worst-case scenario, the entire processing flow is blocked: the blocked actors keep holding their threads until no threads are left.

Nevertheless, the Akka actor system does have significant benefits. Conceptually, each actor is assigned its own light-weight thread and runs within that thread so there is no chance that one actor will be invoked by multiple threads at the same time. This means you don't need to be concerned about the thread safety of the actor's state. Also, the supervisor hierarchy assures the fault tolerance of the system.
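To make the mailbox idea concrete, here is a toy actor written with plain JDK classes. It is not Akka and omits nearly everything Akka provides; the class name `MiniActor` is made up for this sketch. It only demonstrates the two properties discussed above: sending is asynchronous, and at most one thread processes the mailbox at any moment, so the behavior can mutate its state without locks.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.Executor;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.function.Consumer;

// Toy actor, not Akka: a mailbox plus a guarantee that only one thread drains it at a time.
class MiniActor<M> {
    private final Queue<M> mailbox = new ConcurrentLinkedQueue<>();
    private final AtomicBoolean scheduled = new AtomicBoolean(false);
    private final Executor executor;
    private final Consumer<M> behavior;

    MiniActor(Executor executor, Consumer<M> behavior) {
        this.executor = executor;
        this.behavior = behavior;
    }

    // Asynchronous send: enqueue the message and return immediately.
    void tell(M message) {
        mailbox.add(message);
        trySchedule();
    }

    private void trySchedule() {
        // Only the caller that flips the flag submits a drain task.
        if (!mailbox.isEmpty() && scheduled.compareAndSet(false, true)) {
            executor.execute(this::drain);
        }
    }

    private void drain() {
        try {
            M message;
            while ((message = mailbox.poll()) != null) {
                behavior.accept(message);   // state touched here needs no locking
            }
        } finally {
            scheduled.set(false);
            trySchedule();                  // pick up messages that raced with the reset
        }
    }
}
```

If `behavior` blocks, the drain task holds its executor thread for the whole wait and the mailbox keeps growing, which is the failure mode described earlier.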

Supervisor

One noteworthy aspect of the actor lifecycle is that actors can only be created by other actors. This means all actors have a parent-child relationship. When one actor creates another actor, the creating actor becomes the parent (supervisor) and the created actor becomes its child. Consequently, every actor has exactly one supervisor. The Akka actor system also adopts a "let-it-crash" model. If a child actor throws an exception, the failure is reported to its parent actor, which is then responsible for handling the error. Depending on the exception type, the parent actor chooses the most appropriate response from the following four directives.

- Restart: Restarts the actor. Creates a new instance of the actor and continues with the next message enqueued in its mailbox.

- Resume: Continues with the next message enqueued in its mailbox. Whereas "Restart" creates a new instance of the actor, "Resume" reuses the existing one. "Restart" is used when the actor cannot retain its normal state; "Resume" is used when processing can continue with the current state.

- Stop: Stops the actor. The messages remaining in its mailbox at that point will no longer be processed.

- Escalate: When the parent actor cannot handle the exception thrown by its child, it escalates the error to its higher supervisor.
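The choice among the four directives can be pictured as a lookup from exception type to directive. The sketch below models this with a plain enum and a small `Decider` class; both names and the example exception mappings are hypothetical and are not Akka's own types or our production rules.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// The four directives described above, modeled as a plain enum (not Akka's own types).
enum Directive { RESTART, RESUME, STOP, ESCALATE }

// Hypothetical decider: maps exception types to directives; the first matching rule wins.
class Decider {
    private final Map<Class<? extends Throwable>, Directive> rules = new LinkedHashMap<>();

    Decider on(Class<? extends Throwable> type, Directive directive) {
        rules.put(type, directive);
        return this;
    }

    Directive decide(Throwable failure) {
        for (Map.Entry<Class<? extends Throwable>, Directive> rule : rules.entrySet()) {
            if (rule.getKey().isInstance(failure)) return rule.getValue();
        }
        return Directive.ESCALATE;  // unknown failures go to the next supervisor up
    }
}
```

For example, a supervisor might register `IllegalArgumentException` as `RESUME` (the actor's state is still valid) and `IllegalStateException` as `RESTART` (the state can no longer be trusted), leaving everything else to be escalated.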

Given that actors may be restarted, resumed, or stopped, one might think that an application referring to those actors has to take their lifecycle into account. However, creating an actor returns a reference to it, called an "ActorRef", rather than the actor itself. Applications send messages to the ActorRef and thus don't need to be concerned about the state of the actual actor, whether it is being restarted or stopped. This not only simplifies the implementation but also lets you build a distributed actor system across multiple servers without changing application code to account for where the actors are located.

Actor system configuration on Chat Server

Below is a simplified depiction of the overall architecture, focused on the individual actors.

As you can see, three types of actors (ChatSupervisor, ChatRoomActor, and UserActor) interact with each other to broadcast user comments. Their roles are as follows.

- ChatSupervisor: Each JVM has exactly one ChatSupervisor actor. This actor creates and monitors the other actors. It also routes messages arriving from outside to their destination actors. This is the top-level actor. It contains no business logic and is not concerned with handling individual messages.

- ChatRoomActor: The actor that is created for each chat room. The various messages that represent chat room-related events, such as a comment being sent or delivery being terminated, are all routed to this actor. ChatRoomActor is in charge of publishing comments to Redis and storing comments in Redis for comment synchronization between servers (more details in the following section). It also passes to UserActor all messages destined for the clients.

- UserActor: The actor that is created for each user. This actor receives messages from ChatRoomActor and transmits payloads to the client over a WebSocket connection.

So far, I've explained how ChatRoomActor works with Redis and how UserActor sends payloads through a WebSocket connection. As I mentioned earlier, it is very important not to trigger a blocking call within the actors. In the rare cases where an operation would otherwise block, we use asynchronous methods as much as we can to prevent the actor system from tying up its threads.
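A common way to keep an actor non-blocking is to hand the result of an asynchronous call back to the actor as an ordinary message instead of waiting for it. The sketch below shows the idea with `CompletableFuture`; the `Pipe` class and the `OK:`/`FAILED:` message strings are hypothetical, and `tell` stands in for whatever delivers a message to the actor's mailbox. Akka ships its own pipe pattern for this purpose, which this toy version does not reproduce.

```java
import java.util.concurrent.CompletableFuture;
import java.util.function.Consumer;

// Hypothetical helper: forwards the outcome of an async call to an actor-style
// "tell" function as a message, so the caller never waits on the future.
class Pipe {
    static void toSelf(CompletableFuture<String> pending, Consumer<String> tell) {
        pending.whenComplete((value, error) -> {
            if (error != null) {
                tell.accept("FAILED: " + error.getMessage());
            } else {
                tell.accept("OK: " + value);
            }
        });
        // Returns immediately; no thread is parked in get() or join().
    }
}
```

The actor then handles the success or failure message like any other message in its mailbox, on its own terms and without holding a thread in the meantime.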

Using Redis Cluster Pub/Sub

The chat feature uses Redis Cluster for comment synchronization between servers and for temporary storage of comments and metrics data.

Comment synchronization

I already explained that chat rooms can be split depending on the number of users and that users can be distributed across multiple servers even when they are in the same room. However, when a chat room is spread over multiple servers, you need a way to synchronize comments between those servers. The Akka toolkit provides some features for this, and you may consider using Akka Cluster or implementing the event bus mechanism. However, with Akka Cluster, you need to address the issues arising from distributed nodes. Likewise, using the event bus makes deployment more complicated. For these reasons, after looking for a tool that is both easy to run and easy to implement, we chose Redis Pub/Sub. The following image will help you better understand. Users from the same chat room are connected to different servers, and all the comments generated in that chat room are synchronized using the Redis Pub/Sub feature.
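The flow can be sketched without Redis at all. In the example below, `FakePubSub` is an in-memory stand-in for Redis Pub/Sub, and `ChatServerNode` plays the part of one chat server; both classes and the `chatroom:<id>` channel naming are assumptions made for illustration. The point is that a server never talks to another server directly: it publishes to the room's channel and delivers whatever arrives on that channel to its own clients.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// In-memory stand-in for Redis Pub/Sub: one channel per chat room.
class FakePubSub {
    private final Map<String, List<Consumer<String>>> subscribers = new ConcurrentHashMap<>();

    void subscribe(String channel, Consumer<String> listener) {
        subscribers.computeIfAbsent(channel, c -> new CopyOnWriteArrayList<>()).add(listener);
    }

    void publish(String channel, String message) {
        for (Consumer<String> listener : subscribers.getOrDefault(channel, new ArrayList<>())) {
            listener.accept(message);
        }
    }
}

// Hypothetical chat server: forwards local comments to the channel and delivers
// everything received on the channel to its own connected clients.
class ChatServerNode {
    final List<String> deliveredToLocalClients = new ArrayList<>();
    private final FakePubSub pubSub;
    private final String channel;

    ChatServerNode(FakePubSub pubSub, String roomId) {
        this.pubSub = pubSub;
        this.channel = "chatroom:" + roomId;          // assumed channel naming
        pubSub.subscribe(channel, deliveredToLocalClients::add);
    }

    void onCommentFromClient(String comment) {
        pubSub.publish(channel, comment);             // no direct server-to-server call
    }
}
```

If two nodes are created for room "42" and one receives a comment, both nodes end up delivering it to their local clients, while a node serving a different room receives nothing.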

Using high-speed KVS

Another reason for choosing Redis Cluster is the high availability and scalability it provides. Redis is used as temporary storage for comments and for counting purposes. The rationale behind this approach is to cope with the growing influx of comments. During a live stream, Redis Cluster serves as a high-speed read/write in-memory KVS that stores comments and gift events. Once the stream ends, all data is migrated to MySQL for permanent storage. Redis stores events in a sorted set, scored by the elapsed time of the stream. This is particularly useful for displaying dozens of the most recent events in chronological order when users enter a chat room. Those events are migrated to MySQL afterward and stored in normalized tables.
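The sorted-set access pattern can be mimicked in memory to show why it suits the "most recent events" query. The `RecentEvents` class below is a hypothetical stand-in built on a `TreeMap`, not a Redis client. One difference to keep in mind is that events with the same score keep insertion order here, whereas Redis orders equal-score members lexicographically.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory stand-in for a Redis sorted set whose score is the elapsed stream time.
class RecentEvents {
    // score (elapsed milliseconds) -> events recorded at that instant
    private final TreeMap<Long, List<String>> byElapsed = new TreeMap<>();

    // Roughly what ZADD does: insert the event under its score.
    synchronized void add(long elapsedMillis, String event) {
        byElapsed.computeIfAbsent(elapsedMillis, k -> new ArrayList<>()).add(event);
    }

    // Returns up to `limit` of the newest events, oldest first, ready for display.
    synchronized List<String> latest(int limit) {
        List<String> newestFirst = new ArrayList<>();
        for (Map.Entry<Long, List<String>> entry : byElapsed.descendingMap().entrySet()) {
            List<String> sameScore = entry.getValue();
            for (int i = sameScore.size() - 1; i >= 0 && newestFirst.size() < limit; i--) {
                newestFirst.add(sameScore.get(i));
            }
            if (newestFirst.size() >= limit) break;
        }
        Collections.reverse(newestFirst);
        return newestFirst;
    }
}
```

Because the structure is ordered by score, fetching the latest few events only walks the tail of the set, regardless of how many events the stream has accumulated.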

Redis clients

There are many Redis client libraries you can use with Java. The Redis documentation recommends Jedis, lettuce, and Redisson. Our chat feature adopted lettuce for the following reasons.

- Supports Redis Cluster

- Supports Master/Slave failover and MOVED/ASK redirects, keeps node and hash slot information in a cache up-to-date

- Provides asynchronous API

- Supports failover for Pub/Sub Subscribe connections

- Actively developed

Again, an asynchronous API is very important for preventing blocking operations within Akka actors. Also, when using Pub/Sub with ChatRoomActor, we need to monitor the Subscribe connections to ensure that each ChatRoomActor keeps its subscription from the start of the stream all the way through to the end. Lettuce has a feature named ClusterClientOptions. When properly set, this feature can detect that a node is down and refresh the connection to the node by reconnecting. Another benefit of using lettuce is that the Subscribe connection is created to the node with the fewest clients.

Closing words

This concludes my introduction to the LINE LIVE chat feature. The three main points to remember are:

- WebSocket: Real-time two-way messaging between the client and server

- Akka toolkit: High-speed parallel processing servers

- Redis Cluster: Temporary data storage and comment synchronization between servers using Pub/Sub

As mentioned in the beginning, the chat feature runs on over 100 server instances. As a consequence, we sometimes encounter edge cases in 3rd party libraries while distributing large volumes of comments. In such cases, we create GitHub issues and work with the community and developers to fix them or implement workarounds. In a recent example, we observed signs of malfunction in lettuce after new nodes were added to Redis Cluster. We reported the issue on GitHub and had it fixed.

I would like to end this article by letting you know that LINE is always looking for engineers interested in working with multicultural teams. Become a part of the company!